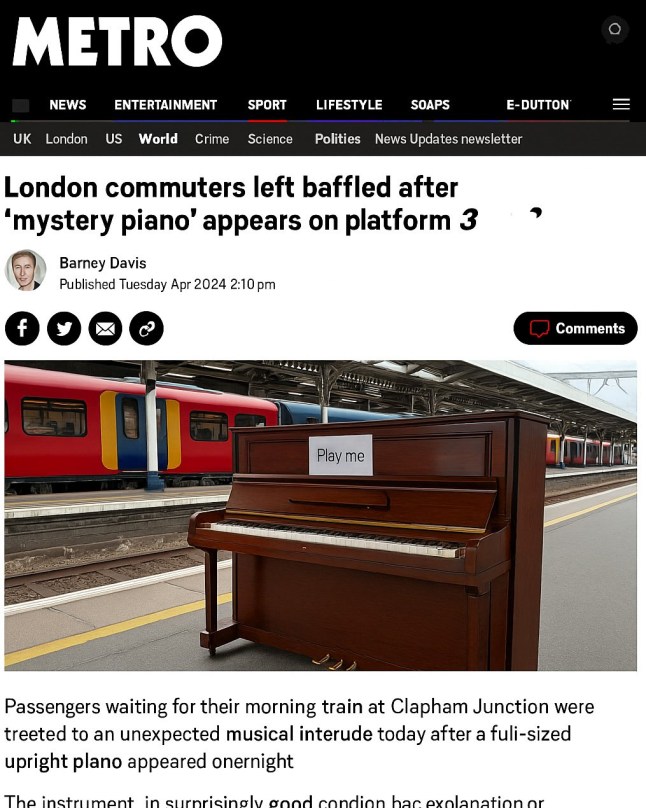

Last year, Metro’s night news editor, Barney Davis, wrote a story about a piano appearing on a London railway station platform.

Commuters were confused, to say the least, but apparently didn’t mind the musical interlude.

Except, Davis didn’t – he wasn’t even at the Metro in 2024. And commuters at Clapham Junction were never ‘baffled’ by a ‘mystery piano’, given that this never happened. (Davis can also spell words correctly.)

This is a fake news story that we asked ChatGPT, an artificial intelligence (AI) chatbot, to generate today. It took only a minute or two to make.

More than 34 million AI images are churned out a day, with the technology constantly getting better at producing lifelike photos and video.

‘AI imagery doesn’t just fake reality, it bends context, and even a harmless-looking photo can be used to drive a dangerous false narrative,’ Naomi Owusu, the CEO and co-founder of live digital publishing platform Tickaroo, told Metro.

‘Once fake imagery is seen, it can install a “new reality” in people’s minds, and even if it’s proven false later, it can be incredibly hard to undo the impression it leaves.’

Experts told Metro that there are still a fair few ways to spot an AI fake – for now, at least.

1. It’s all in the details

Images made using text-to-image algorithms look good from afar, but are often far from good, Vaidotas Šedys, the chief risk officer at the web intelligence solutions provider Oxylabs, said.

‘Hands are often the giveaway,’ Šedys said. ‘Too many fingers, misaligned limbs, or strange shadowing can suggest the image isn’t human-made.’

Take the bogus Metro story: the copy is riddled with spelling mistakes (‘fuli-sized’ and your favourite section, ‘E-Dutton’) and the font looks smeared.

Or take the viral TikTok clip showing bunnies bouncing on a trampoline: the furry animals blend into one another and warp at times, which they definitely don’t do in real life.

Julius Černiauskas, Oxylabs CEO, added: ‘You can typically identify a video fake when it has unnatural movements and poor quality, grainy images.’

2. ‘Robotic’ writing

Okay, first of all—no judgement here. We’re all fooled by AI images. It’s not just you, but parents, politicians and CEOs. The result? It means the tech is doing exactly what it was designed to do: look real.

The above is our attempt to write in the same way that chatbots now infamously do, a style that Šedys says is ‘overly polished and robotic’.

‘Look for content that lacks natural rhythm or relies on too many generic examples,’ he adds.

3. Deepfakes vs AI

Deepfake technology is software that allows people to swap faces, voices and other characteristics to create talking digital puppets.

‘Some people deploy deepfakes to scam or extort money, fabricating compromising “evidence” to deceive others,’ Černiauskas said.

‘In other cases, deepfakes are used for spreading misinformation, often with political motives. They can destabilise countries, sway public opinion, or be manipulated by foreign agents.

‘There are even those who create deepfakes just for the thrill, without any regard for the damage they may cause.

‘Just because we haven’t fallen victim to deepfakes, doesn’t mean it won’t happen as AI continues to improve.’

4. Use reverse image search and other tools

ChatGPT and other synthetic image-makers excel at faces because, as examples of generative AI, they spend their days gobbling up online data.

Researchers have found that faces created by AI systems were perceived as more realistic than photographs of real people, a phenomenon called hyper-realism.

So, ironically, a giveaway that an image isn’t genuine is that someone’s face looks a little too perfect.

Owusu said: ‘It’s not about trusting your eyes anymore, it’s about checking your sources. We can’t fact-check pixels alone.

‘Use common sense: ask yourself how likely it is that what you’re seeing is real, and how likely it is that someone would have captured that exact moment? If it looks too Photoshopped, it might be AI.’

If you’re suspicious, do a reverse image search, which will find other places on the internet the photo exists.

‘If it can’t be traced, it should be treated as unverified, no matter how compelling it looks,’ Owusu said.

Services like ZeroGPT can rifle through text to see if it’s AI jibber jabber.

Check the metadata – the digital fingerprints embedded in photos, documents and web pages – if something is raising your eyebrow.
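
For readers comfortable with a little code, here is a minimal sketch of what checking metadata can look like. It uses only Python’s standard library and reads the text labels (‘tEXt’ chunks) that a PNG image can carry. The sample image and the ‘ExampleGenerator 1.0’ label are made up for illustration, and not every tool writes such labels. Metadata can also be stripped or edited, so an empty result proves nothing on its own.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data):
    """Return the keyword/value pairs stored in a PNG's tEXt chunks."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    found = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk starts with a 4-byte length and a 4-byte type.
        length, kind = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if kind == b"tEXt":
            # A tEXt body is "keyword", a zero byte, then the text.
            keyword, _, value = body.partition(b"\x00")
            found[keyword.decode("latin-1")] = value.decode("latin-1")
        if kind == b"IEND":
            break
        pos += 8 + length + 4  # header + body + 4-byte checksum
    return found


def _chunk(kind, body):
    """Build one PNG chunk (helper for the made-up sample only)."""
    crc = zlib.crc32(kind + body)
    return struct.pack(">I", len(body)) + kind + body + struct.pack(">I", crc)


def make_sample_png():
    """Build a 1x1 greyscale PNG carrying a hypothetical 'Software' label."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    pixels = zlib.compress(b"\x00\x00")  # filter byte + one black pixel
    return (
        PNG_SIGNATURE
        + _chunk(b"IHDR", ihdr)
        + _chunk(b"tEXt", b"Software\x00ExampleGenerator 1.0")
        + _chunk(b"IDAT", pixels)
        + _chunk(b"IEND", b"")
    )


if __name__ == "__main__":
    print(read_png_text_chunks(make_sample_png()))
```

To try it on a real file, read the file’s bytes with `open(path, "rb").read()` and pass them to `read_png_text_chunks`. Other formats, such as JPEG, store their metadata differently and would need a different reader.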

5. Curate your social media feeds

In May, four of the top 10 YouTube channels by subscribers featured AI-manufactured material in every video, according to an analysis by the Garbage Day newsletter.

Some AI content creators don’t specify that what their followers are looking at is a phoney, whether by saying so in the caption or by applying the ‘Made with AI’ tag that some social media platforms offer. Some accounts are nothing more than AI bots, spewing inauthentic posts and images.

This shows how important it is to be intentional about who you follow, especially when it comes to news outlets, all three experts we spoke with said.

Why does this matter?

Because it is free and easy to use, countless people now turn to AI to help write emails, plan their weekly budgets or get life advice.

But many people are also generating with impunity a flood of fake photos and videos about world leaders, political candidates and their supporters. This conveyor belt of misinformation can sway elections and erode democracy, political campaigners previously told Metro.

The International Panel on the Information Environment, a group of scientists, found that of the more than 50 elections that took place last year, AI was used in 80%.

It won’t be long until counterfeit images and video become ‘impossible’ to differentiate from authentic ones, Černiauskas said, further chipping away at the public’s trust in what’s in front of them.

‘Don’t take it for granted that you can spot the difference with the real thing,’ he added.

As much as governments and regulators may try to get a grip on AI hoaxes, the ever-advancing tech easily outpaces legislation.

‘Don’t trust everything you see on your screen,’ said Šedys. ‘Critical thinking will be your best defence as AI continues to evolve.’

Get in touch with our news team by emailing us at webnews@metro.co.uk.
